Continuing from my earlier post ‘Security in SQL Server – Implementing Encryption‘, where I had described the implementation of encryption in SQL Server and briefed on it’s process workflow, here I am once again posting on the same topic with some screenshots to make it easier for you, and for your convenient understanding and smoother implementation.

SQL Server Data Collector – A monitoring tool for free.

Taking a close look at the monitoring features available in SQL Server 2008 and higher versions, there is quite an interesting and easy utility available for usage.

It’s called the Data Collector.

Below mentioned are some basic details on this facility, which is readily available in SQL Server and how we can enable the same.

What is Data Collector?

Data Collector is an optional feature of SQL Server 2008 that collects and stores information about SQL Server’s 2008’s status and performance over time, using pre-defined data collection sets.

What version of SQL Server supports it?

SQL Server 2008 and above features this monitoring utility.

How it is beneficial?

The data collector enables you to adjust the scope of data collection to suit your test and production environments. The data collector also uses a data warehouse, a relational database that enables you to manage the data that you collect by setting different retention periods for your data.

What details does it capture?

The Data Collector includes three default data collection sets it uses to collect various data from SQL Server instances:

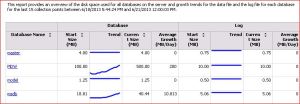

1.) Disk Usage:

Disk space used by databases; Data file & Log file growth rate of a database in a SQL Server 2008 instance.

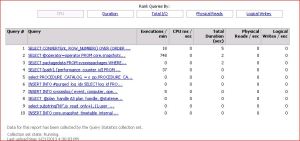

2.) Query Statistics:

Execution count of a query, Total duration for a query execution,

I/O cost & CPU utilization of a query in a SQL Server 2008 instance.

Number of logical disk reads performed by the server on behalf of the event.

Number of physical disk writes performed by the server on behalf of the event.

3.) Server Activity:

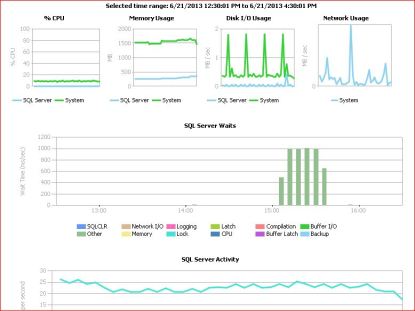

Resource consumption details like CPU,memory,disk i/o & network usage, SQL Server waits, SQL Server activities like (user connections,logins,logouts,transactions,batch requests and SQL compilations & recompilations) for a SQL Server 2008 instance and also for host OS where data collection option is configured.

How to enable Data Collector?

To enable the data collector using SSMS – GUI

– In Object Explorer, expand the Management node.

– Right-click Data Collection, and then click Enable Data Collection.

To disable the data collector–

– In Object Explorer, expand the Management node.

– Right-click Data Collection, and then click Disable Data Collection.

Screenshots showing what kind of reports you will get upon enabling this feature-

Server Activity ::

Query Statistics::

Limitations of the Data Collector:-

1) It can only collect data from SQL Server 2008 (not previous versions).

2) Memory, CPU and disk I/O resources are consumed on the SQL Server instances being monitored.

3) In most cases, a dedicated SQL Server instance is required, if many instances are to be monitored.

4) Customizing data collection sets and reports is not particularly easy.

5) Once installed, it can’t be uninstalled, only disabled.

I hope this information is useful to you and same can be implemented in your respective environments without spending more on 3rd party monitoring tools.

Cheers!

Aman Kharbanda

World of data is changing

Security in SQL Server – Implementing Encryption

Fragmentation – A culprit often blamed for slow server performance

Security in SQL Server – Implementing Encryption

Recently we had a requirement from the application team to enable encryption on their newly built SQL DB Server. This was a new challenge to the team, and I thought of sharing some knowledge which I had gained during the implementation of this new project.

Encryption (TDE) is available only in Enterprise and Data Center Editions of SQL Server 2008. So, if you are using Standard Edition, you will not be able to utilize this key feature in terms of security deployment.

The practice of encrypting and decrypting data is known as cryptography, and

is a common and effective method of protecting sensitive data. SQL Server

provides many cryptography features such as cell-level encryption and

Transparent Data Encryption (TDE) to protect data at rest.

What is TDE (Transparent Data Encryption) –

TDE is a new encryption feature introduced in Microsoft SQL Server 2008. It is designed to provide protection for the entire database at rest without affecting existing applications. Implementing encryption in a database traditionally involves complicated application changes such as modifying table schemas, removing functionality, and significant performance degradations.

How data is encrypted-

When TDE is enabled (or disabled), the database is marked as encrypted in the sys.databases catalog view and the DEK state is set to Encryption In Progress. The server starts a background thread (called the encryption scan or scan) that scans all database files and encrypts them (or decrypts them if you are disabling TDE). While the DDL executes, an update lock is taken on the database. The encryption scan, which runs asynchronously to the DDL, takes a shared lock. All normal operations that do not conflict with these locks can proceed. Excluded operations include modifying the file structure and detaching the database. While normal database writes to disk from the buffer pool are encrypted, log file writes may not be. The scan also forces a rollover for the virtual log file (VLF) to ensure that future writes to the log are encrypted.

How to Enable TDE-

To enable TDE, you must have the normal permissions associated with creating a database master key and certificates in the master database. You must also have CONTROL permissions on the user database.

Perform the following steps in the master database:

1. If it does not already exist, create a database master key (DMK) for the master database. Ensure that the database master key is encrypted by the service master key (SMK).

CREATE MASTER KEY ENCRYPTION BY PASSWORD = ‘some password’;

2. Either create or designate an existing certificate for use as the database encryption key (DEK) protector. For the best security, it is recommended that you create a new certificate whose only function is to protect the DEK. Ensure that this certificate is protected by the DMK.

CREATE CERTIFICATE tdeCert WITH SUBJECT = ‘TDE Certificate’;

3. Create a backup of the certificate with the private key and store it in a secure location. (Note that the private key is stored in a separate file—be sure to keep both files). Be sure to maintain backups of the certificate as data loss may occur otherwise.

BACKUP CERTIFICATE tdeCert TO FILE = ‘path_to_file’

WITH PRIVATE KEY (

FILE = ‘path_to_private_key_file’,

ENCRYPTION BY PASSWORD = ‘cert password’);

4. Optionally, enable SSL on the server to protect data in transit.

Perform the following steps in the user database. These require CONTROL permissions on the database.

5. Create the database encryption key (DEK) encrypted with the certificate designated from step 2 above. This certificate is referenced as a server certificate to distinguish it from other certificates that may be stored in the user database.

CREATE DATABASE ENCRYPTION KEY

WITH ALGORITHM = AES_256

ENCRYPTION BY SERVER CERTIFICATE tdeCert

6. Enable TDE. This command starts a background thread (referred to as the encryption scan), which runs asynchronously.

ALTER DATABASE myDatabase SET ENCRYPTION ON

To monitor progress, query the sys.dm_database_encryption_keys view (the VIEW SERVER STATE permission is required) as in the following example:

SELECT db_name(database_id), encryption_state

FROM sys.dm_database_encryption_keys

What is encrypted-

TDE operates at the I/O level through the buffer pool. Thus, any data that is written into the database file (*.mdf) is encrypted. Snapshots and backups are also designed to take advantage of the encryption provided by TDE so these are encrypted on disk as well. Data that is in use, however, is not encrypted because TDE does not provide protection at the memory or transit level. The transaction log is also protected, but additional caveats apply.

Impact on Database-

TDE is designed to be as transparent as possible. No application changes are required and the user experience is the same whether using a TDE-encrypted database or a non-encrypted database.

While TDE operations are not allowed if the database has any read-only filegroups, TDE can be used with read-only filegroups. To enable TDE on a database that has read-only filegroups, the filegroups must first be set to allow writes. After the encryption scan completes, the filegroup can be set back to read only. Key changes or decryption must be performed the same way.

Database Backup-

When TDE is enabled on a database, all backups are encrypted. Thus, special care must be taken to ensure that the certificate that was used to protect the DEK is backed up and maintained with the database backup. If this certificate (or certificates) is lost, the data will be unreadable. Back up the certificate along with the database. Each certificate backup should have two files; both of these files should be archived (ideally separately from the database backup file for security). Alternatively, consider using the extensible key management (EKM) feature for storage and maintenance of keys used for TDE.

Hope this helps you to get a brief idea on how well we can implement this utility in SQL Server environments.

Aman Kharbanda

Fragmentation – A culprit often blamed for slow server performance

Shedding more light on a frequent check up called ‘Fragmentation’ whenever we get issues on application slowness or bad server performance-

As the data in Microsoft SQL Server tables changes their indexes change. Over time these indexes become fragmented. This fragmentation will adversely affect performance.

Common questions that arrive in our minds is “How is SQL Server’s fragmentation affecting my Server?”

Fragmentation of SQL Server indexes mainly creates wasted space that can affect your server performance much more than one might expect.

Fragmentation of your SQL Server’s internal allocations and page structures result in ‘gaps’ or ‘void’ space that is dead weight carried along with valid data. Your backups, storage, I/O channels, buffer memory, cached data, logs, tempdb, CPU’s and query plans are impacted by these unnecessary voids.

SQL Server’s fragmentation continually eats away at these resources with nearly every update, delete, insert, and table/index change. If ignored, fragmentation can be the proverbial ‘death by a thousand cuts’ to a server’s performance and scalability.

What creates the voids and other adverse effects and how do I get a handle on them?

Typical, day to day activity causes SQL Servers to fragment over time. Changes to your data – inserts, updates, deletes, and even changing varchar values contribute to fragmentation. The full list of actions that cause fragmentation is long and the rate of fragmentation varies across different indexes and tables.

Sometimes there is a pattern resulting from seasonal or annual peaks (e.g., when financials run).

But more often than not, it is difficult to find, predict and proactively manage fragmentation manually.

Look for the index fragmentation levels by using SQL Server DMV’s and perform rebuild/reorganize operation on indexes.

Scheduling jobs on a timely basis, helps to improve the performance in a slight manner, but simultaneously we should look to fine tune the application queries as well (Are they running with bad execution plans) to serve a long term solution and keep the server performance tuned.

Aman Kharbanda

World of data is changing

The world of data is changing-

Changes in the way we do business are transforming the way we interact with data. Businesses face growth in the volume of information and in the types of data they encounter, from increased transactions to unstructured data, image files, and information from new sources such as sensor networks. We’re being asked new types of questions—as businesses go online, we are interacting through new channels that provide a whole new set of data and a greater urgency to move forward in real time. There is a new scope, scale, and diversity to the types and shapes of information that we now need to process, manage, and analyze for business insight.

The proliferation of data extends beyond traditional data types. According to Gartner, the total volume of data worldwide is growing at a rate of 59 percent per year. Furthermore, Gartner estimates that 70–85 percent of data is unstructured.

The dramatic shift from structured to unstructured and complex data types requires organizations to embrace back-end solutions that support complex data types and non-traditional data sources—such as Big Data.

The way we work, and our expectations about how we work, are changing.

With everything we are able to do on the Web and with the emergence of social media, we are discovering, sharing, and collaborating on information in whole new ways. Business intelligence is no different, in terms of how we access information, collaborate and work with others, and build on the work that other people have done.

Aman